
HBase, the non-relational, distributed database of the Hadoop ecosystem, is fully supported in Stambia, as explained in the presentation article.

Below you will find everything you need to start using HBase in Stambia, from creating the Metadata to building your first Mappings.

Prerequisites: You must install the Hadoop connector to be able to work with HBase.

Refer to the following article, which guides you through the installation.

Metadata

The first step to work with HBase in Stambia DI is to create and configure the HBase Metadata.

Here is a summary of the main steps to follow:

- Creation of the HBase Metadata

- Configuration of the server properties

- (Optional) Configuration of the Kerberos security

- Reverse of the namespaces and tables

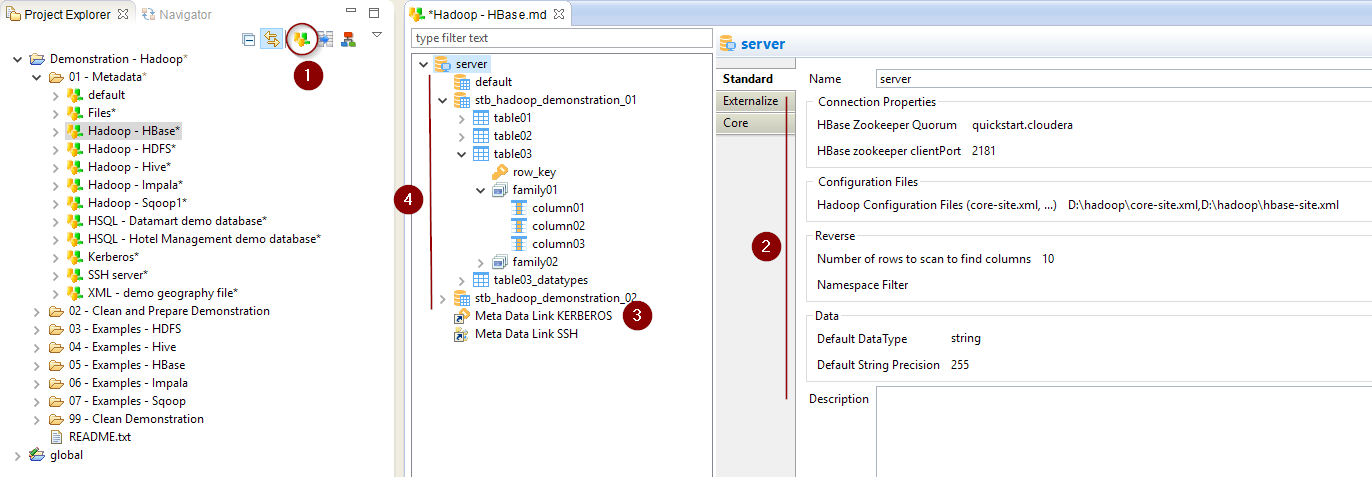

Here is an example of a typical Metadata configuration:

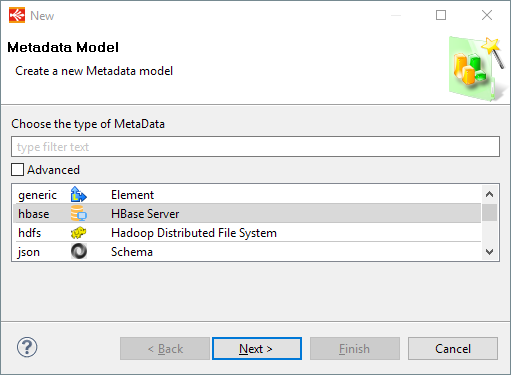

Metadata creation

First, create the HBase Metadata as usual by selecting the HBase technology in the Metadata Creation Wizard.

Click Next, choose a name, and click Finish.

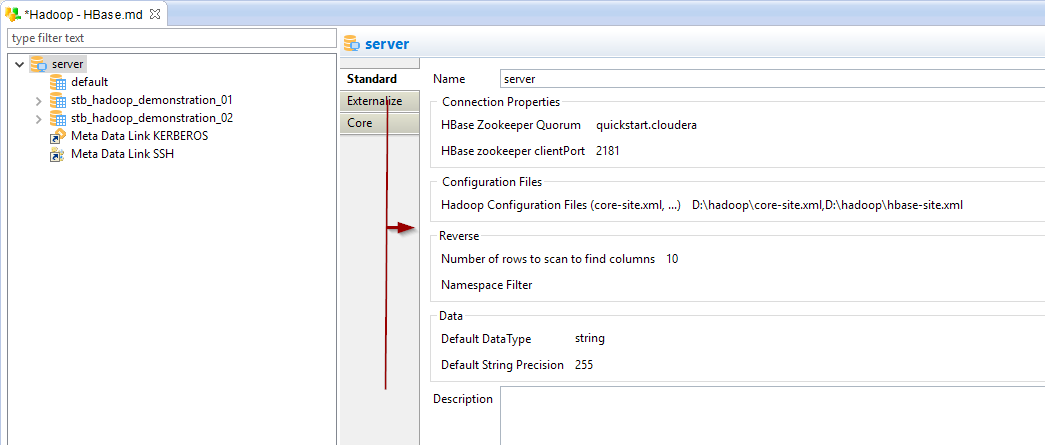

Configuration of the server properties

Once the HBase Metadata is created, you can configure the server properties, which define how to connect to HBase.

The following properties are available:

| Property | Mandatory | Description | Example |
| --- | --- | --- | --- |
| HBase Zookeeper Quorum | Yes | Comma-separated list of the HBase servers in the ZooKeeper quorum | quickstart.cloudera |
| HBase Zookeeper Client Port | Yes | Network port on which the client connects to ZooKeeper | 2181 |
| Hadoop Configuration Files | Recommended | Hadoop stores information about service properties in configuration files such as core-site.xml and hbase-site.xml. These XML files contain a list of properties and information about the Hadoop server. Depending on the environment, network, and distribution, these files might be required to contact and operate on HBase. You can therefore specify them in the Metadata, for instance to avoid network and connection issues. To do so, list them as a comma-separated list of paths pointing to their location. They must be reachable by the Runtime. | D:\hadoop\core-site.xml,D:\hadoop\hbase-site.xml |
| Number of rows to scan to find columns | No | HBase does not store metadata about the columns that exist in a table, so rows must be read from the table to discover the existing columns from the data itself. This property specifies the number of rows scanned to find the available columns to reverse. | 100 (default value if not set) |
| Namespace Filter | No | Optional regular expression used to filter the namespaces to reverse. The '%' character can be used to define 'any character'. | .* or stb_hadoop_% or default |
| Default DataType | No | Default datatype used when no type is defined on a column in the Metadata. Note that HBase stores everything as bytes and has no notion of datatypes. The datatypes specified in Stambia define how to handle data when reading from HBase. See 'About datatypes' further in this article for more information. | string |
| Default String Precision | No | Default precision (size) used for string columns. As with datatypes, this helps Stambia handle data when reading from HBase. | 255 |

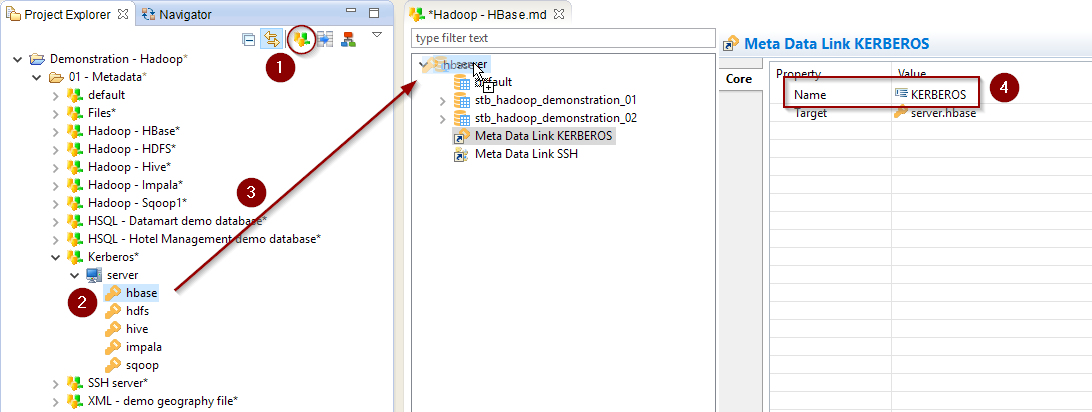
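Hadoop configuration files such as hbase-site.xml share a simple layout: a `configuration` root containing `property` elements, each with a `name` and a `value`. The Python sketch below illustrates that format and how the comma-separated 'Hadoop Configuration Files' value can be split into paths. It is not how the Stambia Runtime loads these files; the helper names are hypothetical, and letting later files override earlier ones is an assumption of this sketch.

```python
import xml.etree.ElementTree as ET
from pathlib import Path


def split_config_paths(value: str) -> list:
    """Split the comma-separated 'Hadoop Configuration Files' value into paths."""
    return [p.strip() for p in value.split(",") if p.strip()]


def parse_hadoop_config(xml_text: str) -> dict:
    """Return the name/value pairs declared in a Hadoop-style *-site.xml document."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None:
            props[name.strip()] = (value or "").strip()
    return props


def load_config_files(paths_value: str) -> dict:
    """Merge all listed files (assumption: a later file overrides an earlier one)."""
    merged = {}
    for path in split_config_paths(paths_value):
        merged.update(parse_hadoop_config(Path(path).read_text(encoding="utf-8")))
    return merged


if __name__ == "__main__":
    sample = """<configuration>
  <property><name>hbase.zookeeper.quorum</name><value>quickstart.cloudera</value></property>
  <property><name>hbase.zookeeper.property.clientPort</name><value>2181</value></property>
</configuration>"""
    print(parse_hadoop_config(sample))
```

The two properties in the sample, hbase.zookeeper.quorum and hbase.zookeeper.property.clientPort, are the standard HBase settings for the ZooKeeper quorum and client port, the same information as the first two rows of the table.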

Configuration of the Kerberos Security

When working with Kerberos-secured Hadoop clusters, connections are protected, so you need to provide Stambia with the credentials and information required to establish the Kerberos connection.

A dedicated Kerberos Metadata lets you specify everything required:

- Create a new Kerberos Metadata (or use an existing one)

- Define in it the Kerberos Principal to use for HBase

- Drag and drop it into the HBase Metadata

- Rename the Metadata Link to 'KERBEROS'

Refer to this dedicated article for further information about configuring the Kerberos Metadata.

Reverse of the namespaces and tables

Your Metadata is now ready, and you can begin reversing the existing namespaces and tables.

During the reverse, Stambia connects to HBase, retrieves the namespaces and tables along with their structures, and creates the corresponding Metadata.

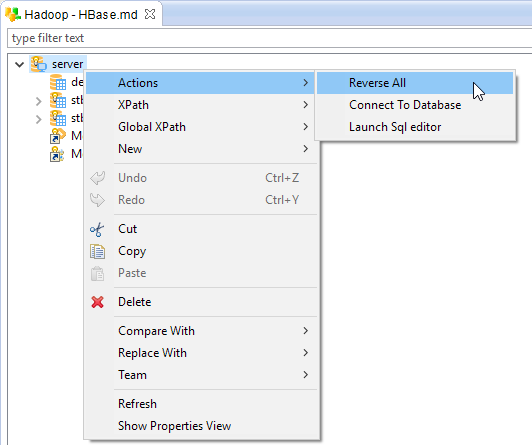

To reverse your objects, right-click the desired node and choose Actions > Reverse [All]:

- On the server node: all the namespaces and corresponding tables will be reversed

- On a namespace node: all the tables of the namespace will be reversed

- On a table: only the structure of the selected table is reversed

Example on the server node, to reverse everything:

You can use the "Namespace Filter" property of the server node to filter the namespaces to reverse.

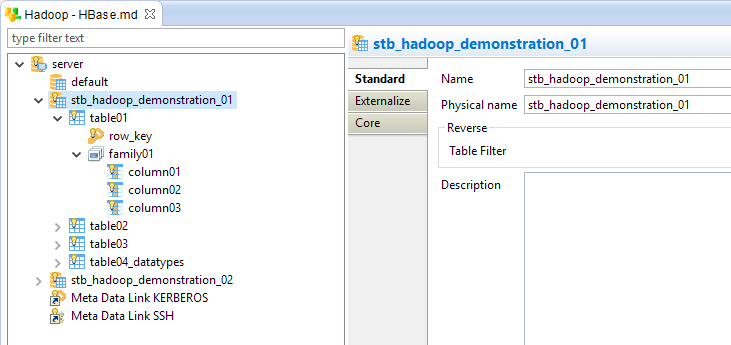
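The "Namespace Filter" property (server node) and the "Table Filter" property (namespace node) decide which objects a reverse picks up. The Python sketch below shows one way such a filter could be applied. It assumes that the pattern must match the whole name and that '%' behaves like the SQL LIKE wildcard, translated to '.*'; the exact matching rules used by Stambia may differ, and `filter_to_regex` and `select_names` are hypothetical helpers.

```python
import re


def filter_to_regex(pattern: str) -> re.Pattern:
    """Translate a Namespace/Table Filter into a compiled regular expression.

    Assumption: '%' stands for any sequence of characters and becomes '.*';
    the rest of the pattern is used as a regular expression unchanged.
    """
    return re.compile(pattern.replace("%", ".*"))


def select_names(names, pattern=None) -> list:
    """Return the names a reverse would keep; an empty filter keeps everything."""
    if not pattern:
        return list(names)
    regex = filter_to_regex(pattern)
    # fullmatch: in this sketch the whole name must match the filter
    return [name for name in names if regex.fullmatch(name)]


namespaces = ["default", "stb_hadoop_sales", "stb_hadoop_hr", "hbase"]
print(select_names(namespaces, "stb_hadoop_%"))
```

The same function applies to a Table Filter, for instance "myTable_%" on the table names of a namespace.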

About the namespace node

The namespace node is the container for tables and corresponds to an actual namespace on the HBase server.

The following properties are available:

| Property | Description | Example |
| --- | --- | --- |
| Name | Logical label used in Stambia to identify the namespace node | namespace01 |
| Physical name | Real name on the HBase server | namespace01 |
| Table Filter | Optional regular expression used to filter the tables to reverse when performing a reverse on this namespace. The '%' character can be used to define 'any character'. | .* or table% or myTable_% |

Use the right-click > Actions > Reverse menu to reverse the tables of the selected namespace.

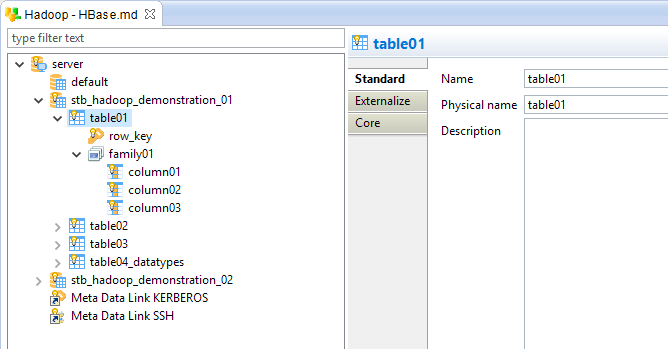

About the table node

The table node represents an HBase table, which contains families, which themselves contain columns.

The following properties are available on the table:

| Property | Description | Example |
| --- | --- | --- |
| Name | Logical label used in Stambia to identify the table node | table01 |
| Physical name | Real name on the HBase server | table01 |

The following properties are available on a family:

| Property | Description | Example |
| --- | --- | --- |
| Name | Logical label used in Stambia to identify the family node | family01 |
| Physical name | Real name on the HBase server | family01 |

The following properties are available on the columns:

| Property | Description | Example |
| --- | --- | --- |
| Name | Logical label used in Stambia to identify the column node | column01 |
| Physical name | Real name on the HBase server | column01 |
| Type | Datatype representing the data contained in this column. Note that HBase stores everything as bytes and has no notion of datatypes. The datatypes specified in Stambia define how to handle data when reading from HBase. See 'About datatypes' further in this article for more information. If not set, the 'Default DataType' specified on the server node is used. | string |
| Precision | Precision (size) of the data contained in this column for the specified type. | 255 |
| Scale | Scale to use for the specified datatype. For numeric datatypes, it represents the number of digits after the decimal point. | 10 |

Use the right-click > Actions > Reverse menu to automatically reverse the structure of the selected table.
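The table, family, and column nodes form a tree, and HBase itself addresses a column as 'family:qualifier'. The Python sketch below models that tree with hypothetical classes and shows the fallback described for the Type property: when a column has no type, the 'Default DataType' of the server node applies. The datatype labels other than "string" are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Column:
    name: str                        # logical label in Stambia
    physical_name: str               # qualifier stored in HBase
    type: Optional[str] = None       # None means "Type not set"
    precision: Optional[int] = None
    scale: Optional[int] = None


@dataclass
class Family:
    name: str
    physical_name: str
    columns: list = field(default_factory=list)


@dataclass
class Table:
    name: str
    physical_name: str
    families: list = field(default_factory=list)


def effective_type(column: Column, default_datatype: str = "string") -> str:
    """Column Type if set, otherwise the server node's 'Default DataType'."""
    return column.type if column.type else default_datatype


def hbase_column_names(table: Table) -> list:
    """Physical HBase column names, written as 'family:qualifier'."""
    return [f"{fam.physical_name}:{col.physical_name}"
            for fam in table.families for col in fam.columns]


table01 = Table("table01", "table01", [Family("family01", "family01", [
    Column("column01", "column01"),
    Column("column02", "column02", type="integer"),
])])
print(hbase_column_names(table01))
```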

About columns

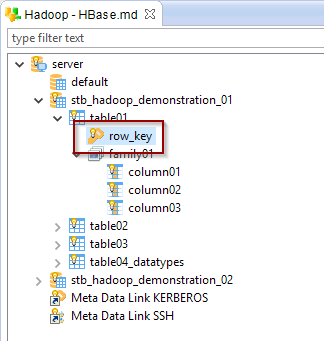

Row Key

In HBase, every row is uniquely identified by a 'Row Key', which is a special field associated with each inserted row.

In Stambia, it is represented as a dedicated column, so that its value can be set when writing and retrieved when reading.

Stambia creates this special column automatically during the reverse and displays it with a small key icon.

If needed, you can also create it manually: it is simply a column named 'row_key' with the Advanced/Is Row Key property checked.

Note that only one column should be set as Row Key. We recommend letting Stambia create it automatically during the reverse to avoid any issue.
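To see why the Row Key is handled as a separate column, the Python sketch below splits a flat record, as it could look in a Mapping, into the two parts HBase works with: the row key and the cells grouped by family. It assumes that non-key fields are named 'family:qualifier', that every value is stored as its UTF-8 string form, and that a None value means "no cell"; `to_put` is a hypothetical helper, not a Stambia or HBase API.

```python
ROW_KEY_COLUMN = "row_key"  # name required for the Row Key column in Stambia


def to_put(record: dict) -> tuple:
    """Split a flat record into (row key bytes, {family: {qualifier: bytes}})."""
    if ROW_KEY_COLUMN not in record:
        raise ValueError("every HBase row needs a row key")
    row_key = str(record[ROW_KEY_COLUMN]).encode("utf-8")
    cells = {}
    for name, value in record.items():
        if name == ROW_KEY_COLUMN or value is None:
            continue  # the key is not stored as a cell; None produces no cell
        family, qualifier = name.split(":", 1)
        cells.setdefault(family, {})[qualifier] = str(value).encode("utf-8")
    return row_key, cells


print(to_put({"row_key": "r1", "family01:column01": "gibbs", "family01:column02": None}))
```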

Columns

In HBase, the columns contained in a row are not predefined.

The number of columns can vary from row to row, and the columns themselves can be completely different.

When reversing the columns, Stambia scans the number of rows specified on the server node and collects all the columns found in them.

As a consequence, the reversed Metadata might not contain all the existing columns: a column that does not appear in any of the scanned rows is not reversed.

If needed, you can easily add new columns manually in the Metadata with a right click > New Column menu on a family.

HBase manages columns dynamically when data is inserted.
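The effect of the 'Number of rows to scan to find columns' property can be sketched in a few lines of Python. Rows are modelled here as in-memory dictionaries `{family: {qualifier: value}}` (an assumption of this sketch, not how Stambia reads HBase); only the first `rows_to_scan` rows are inspected, so a column that appears only later is missed.

```python
from itertools import islice


def discover_columns(rows, rows_to_scan: int = 100) -> list:
    """Collect the 'family:qualifier' names found in the first rows_to_scan rows."""
    seen = {}
    for row in islice(rows, rows_to_scan):
        for family, cells in row.items():
            for qualifier in cells:
                # dict keys keep first-seen order and remove duplicates
                seen.setdefault(f"{family}:{qualifier}", None)
    return list(seen)


rows = [
    {"family01": {"column01": b"1"}},
    {"family01": {"column02": b"2"}},
    {"family01": {"column01": b"3", "column03": b"4"}},  # column03 only appears here
]
print(discover_columns(rows, rows_to_scan=2))
```

With `rows_to_scan=2`, column03 is not found; scanning more rows finds it, at the cost of reading more data during the reverse.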

About datatypes

HBase stores everything as bytes and has no notion of datatypes for columns.

Stambia lets you specify datatypes on the columns to ease data exchange between different technologies.

This tells Stambia what a column contains, so that it can handle the data correctly when reading from HBase.
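Because a cell is only bytes, the same value can only be read correctly if its datatype is known. The Python sketch below decodes a cell for a few datatypes in two common layouts: numbers stored as UTF-8 text (for example b"42"), and numbers stored in the big-endian fixed-width layout produced by HBase's Java `Bytes.toBytes(int/long/double)` helpers. Which layout your data uses depends on how it was written; this sketch does not describe what Stambia does internally, and the labels "integer", "long", and "double" are hypothetical.

```python
import struct

# Hypothetical datatype labels mapped to big-endian struct formats
BINARY_FORMATS = {"integer": ">i", "long": ">q", "double": ">d"}


def decode(raw: bytes, datatype: str, binary: bool = False):
    """Interpret the raw bytes of an HBase cell according to a declared datatype."""
    if datatype == "string":
        return raw.decode("utf-8")
    if datatype not in BINARY_FORMATS:
        raise ValueError(f"datatype not handled in this sketch: {datatype}")
    if binary:
        # Fixed-width big-endian layout, as written by Bytes.toBytes(int/long/double)
        return struct.unpack(BINARY_FORMATS[datatype], raw)[0]
    # Textual layout: the number was stored as UTF-8 digits
    text = raw.decode("utf-8")
    return float(text) if datatype == "double" else int(text)


print(decode(b"42", "integer"), decode(b"\x00\x00\x00\x2a", "integer", binary=True))
```

Both calls return 42, although the stored bytes are completely different; reading the binary bytes as a string would instead produce unreadable characters.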

It also helps define the correct SQL types and sizes of the temporary objects created when loading data from HBase into other database systems such as Teradata, Oracle, or Microsoft SQL Server.
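As an illustration of how datatype, precision, and scale can drive the definition of a temporary table, here is a Python sketch. The mapping to SQL types and the table name TMP_T are hypothetical: the actual templates generate DDL specific to each target database. The default string precision of 255 mirrors the example value of the 'Default String Precision' property.

```python
# Hypothetical mapping from column datatypes to generic SQL types
SQL_TYPES = {"string": "VARCHAR", "integer": "INTEGER", "long": "BIGINT", "decimal": "DECIMAL"}


def sql_column(name, datatype, precision=None, scale=None, default_string_precision=255):
    """Render one column definition from datatype, precision, and scale."""
    base = SQL_TYPES[datatype]
    if datatype == "string":
        # Fall back to the default string precision when none is set on the column
        return f"{name} {base}({precision or default_string_precision})"
    if datatype == "decimal" and precision is not None:
        return f"{name} {base}({precision},{scale or 0})"
    return f"{name} {base}"


def create_temp_table(table, columns):
    """columns: tuples of (name, datatype[, precision[, scale]])."""
    body = ",\n  ".join(sql_column(*c) for c in columns)
    return f"CREATE TABLE {table} (\n  {body}\n)"


print(create_temp_table("TMP_T", [("row_key", "string", 100), ("column01", "string"), ("amount", "decimal", 12, 2)]))
```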

About HBase importtsv tool

HBase usually ships with a native command-line tool called 'importtsv'.

This tool loads data from HDFS into HBase and is optimized for performance.

The Stambia templates can use this method to load data into HBase.

For more information, refer to the following article, which explains how to configure the Metadata and Mappings to use it.
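For reference, importtsv runs as the `org.apache.hadoop.hbase.mapreduce.ImportTsv` job: it reads tab-separated lines from an HDFS directory, and the `importtsv.columns` setting maps each field to `HBASE_ROW_KEY` or to a 'family:qualifier' column. The Python sketch below prepares such lines and the command arguments; it does not reproduce what the Stambia templates generate, and the HDFS path is hypothetical.

```python
def to_tsv_line(row_key: str, values: list) -> str:
    """One importtsv input line: row key first, then the values, tab-separated."""
    fields = [row_key, *values]
    if any("\t" in f or "\n" in f for f in fields):
        raise ValueError("tabs and newlines would break the TSV layout")
    return "\t".join(fields)


def importtsv_command(table: str, columns: list, hdfs_input_dir: str) -> list:
    """Argument list for the ImportTsv job, suitable for subprocess.run()."""
    spec = ",".join(["HBASE_ROW_KEY", *columns])  # first field is the row key
    return ["hbase", "org.apache.hadoop.hbase.mapreduce.ImportTsv",
            f"-Dimporttsv.columns={spec}", table, hdfs_input_dir]


print(importtsv_command("namespace01:table01",
                        ["family01:column01", "family01:column02"],
                        "/tmp/stambia/input"))
```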

Creating your first Mappings

With your Metadata ready and your tables reversed, you can now create your first Mappings.

For the basics, HBase is no different from any other database you would usually use in Stambia.

Drag and drop your source and target and map the columns as usual.

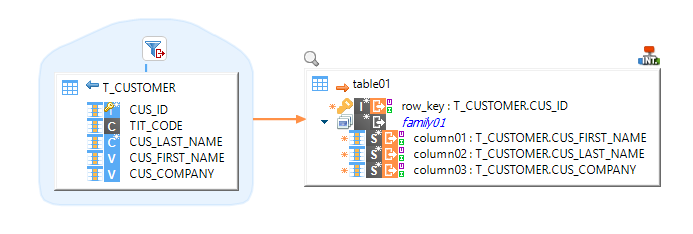

Example of a Mapping loading data from HSQL to HBase:

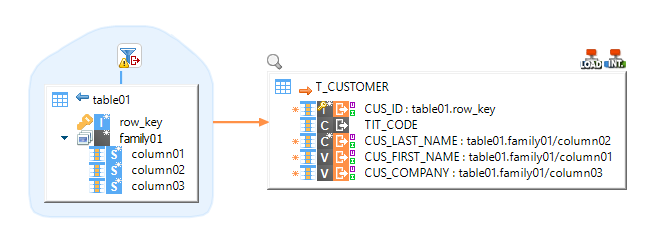

Example of a Mapping loading data from HBase to HSQL, with a filter on HBase:

Filters must use the HBase filter syntax, such as SingleColumnValueFilter ('family01', 'column02', = , 'binary:gibbs').

Refer to the HBase documentation and examples in the Demonstration Project for further information.

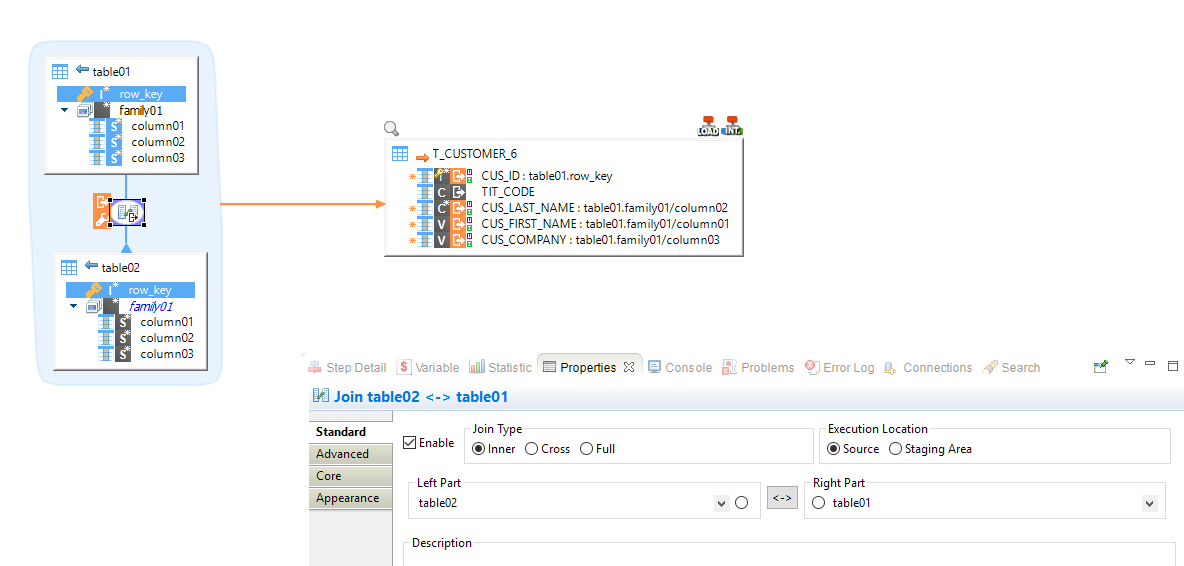
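The filter in the example above follows the HBase filter language: string arguments are single-quoted (a quote inside a string is doubled), and the 'binary:' prefix compares the raw bytes of the cell with the given value. The Python sketch below builds such an expression and emulates its effect on in-memory rows. The helper names are hypothetical; keeping rows that lack the tested column reflects the default behaviour of SingleColumnValueFilter.

```python
import operator

# Comparison operators accepted by the HBase filter language
OPS = {"=": operator.eq, "!=": operator.ne, "<": operator.lt,
       "<=": operator.le, ">": operator.gt, ">=": operator.ge}


def quote(text: str) -> str:
    """Quote a string for the filter language; embedded single quotes are doubled."""
    return "'" + text.replace("'", "''") + "'"


def single_column_value_filter(family: str, qualifier: str, op: str, value: str) -> str:
    """Build a SingleColumnValueFilter expression using a binary comparator."""
    if op not in OPS:
        raise ValueError(f"unsupported operator: {op}")
    return (f"SingleColumnValueFilter ({quote(family)}, {quote(qualifier)}, "
            f"{op}, {quote('binary:' + value)})")


def keeps_row(row: dict, family: str, qualifier: str, op: str, value: bytes,
              filter_if_missing: bool = False) -> bool:
    """Emulate the filter on a row modelled as {family: {qualifier: bytes}}."""
    cell = row.get(family, {}).get(qualifier)
    if cell is None:
        return not filter_if_missing  # rows without the column pass by default
    return OPS[op](cell, value)  # bytes compare lexicographically, like 'binary:'


print(single_column_value_filter("family01", "column02", "=", "gibbs"))
```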

Example of a join between two HBase tables to load a target HSQL table:

The join order, defined with the Left Part / Right Part properties, is important.

Notice the small triangle on the joined table link (table02) in the Mapping.

In this example, we retrieve all the table01 rows whose Row Key exists in table02.

You cannot use the columns of the joined table (table02) in the target table: the joined table is only used as a 'lookup' table.
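The join described above behaves like a semi-join on the Row Key: a table01 row is kept when its key exists in table02, and only table01 data reaches the target. The Python sketch below expresses that logic with tables modelled as in-memory dictionaries `{row_key: cells}`, an assumption of this sketch; it says nothing about how the templates execute the join.

```python
def semi_join(left_rows: dict, right_rows: dict) -> dict:
    """Keep the left rows whose row key also exists on the right side.

    Only the left cells are returned: the right table is a lookup.
    """
    return {key: cells for key, cells in left_rows.items() if key in right_rows}


table01 = {b"r1": {"family01": {"column01": b"a"}}, b"r2": {"family01": {"column01": b"b"}}}
table02 = {b"r2": {"family02": {"x": b"ignored"}}, b"r3": {}}
print(semi_join(table01, table02))
```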

Managing HBase objects with the Stambia Tools

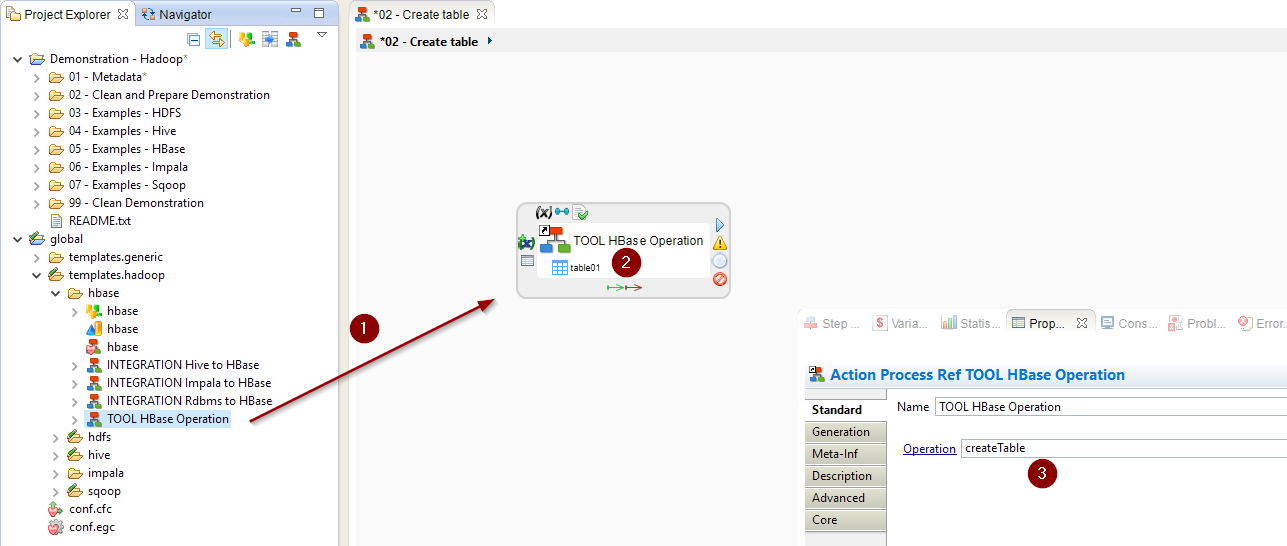

The HBase templates include a Process Tool that performs management operations on HBase, such as creating namespaces or tables, or enabling and disabling tables.

Using it is quite simple:

- Drag and drop the 'TOOL HBase Operation' in a Process

- Drag and drop the HBase object on which you want to perform the operation

- Specify the operation
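To picture the kind of state these operations change, the Python sketch below is a hypothetical in-memory stand-in, not the 'TOOL HBase Operation' itself and not the HBase client API. It reflects two rules of HBase: a newly created table is enabled, and a table must be disabled before it can be deleted.

```python
class FakeHBaseAdmin:
    """Hypothetical in-memory model: namespace -> {table name: enabled flag}."""

    def __init__(self):
        self.namespaces = {"default": {}}

    def create_namespace(self, ns: str) -> None:
        if ns in self.namespaces:
            raise ValueError(f"namespace {ns} already exists")
        self.namespaces[ns] = {}

    def create_table(self, ns: str, table: str) -> None:
        tables = self.namespaces[ns]  # KeyError if the namespace does not exist
        if table in tables:
            raise ValueError(f"table {ns}:{table} already exists")
        tables[table] = True  # a new table starts enabled

    def disable_table(self, ns: str, table: str) -> None:
        self.namespaces[ns][table] = False

    def enable_table(self, ns: str, table: str) -> None:
        self.namespaces[ns][table] = True

    def delete_table(self, ns: str, table: str) -> None:
        if self.namespaces[ns][table]:
            raise RuntimeError("disable the table before deleting it")
        del self.namespaces[ns][table]


admin = FakeHBaseAdmin()
admin.create_namespace("namespace01")
admin.create_table("namespace01", "table01")
admin.disable_table("namespace01", "table01")
admin.delete_table("namespace01", "table01")
```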

Demonstration Project

The Hadoop demonstration project that you can find on the download page contains HBase examples.

Have a look at this project for samples showing how to use HBase.